Microservices Management in Behavox: Part 1 - Why not Kubernetes?

Hey, it's Kirill Proskurin, Head of DevOps Engineering at Behavox.

Not choosing Kubernetes in 2022 sounds like a radical position - and it raises a lot of questions. Everywhere you go, it seems like everyone is using Kubernetes for container management.

Here at Behavox, we try to avoid blindly following the crowd and instead weigh every decision against our use cases. So, today, we're going to talk about our assessment of Kubernetes with respect to Behavox operations.

The paradox of choice?

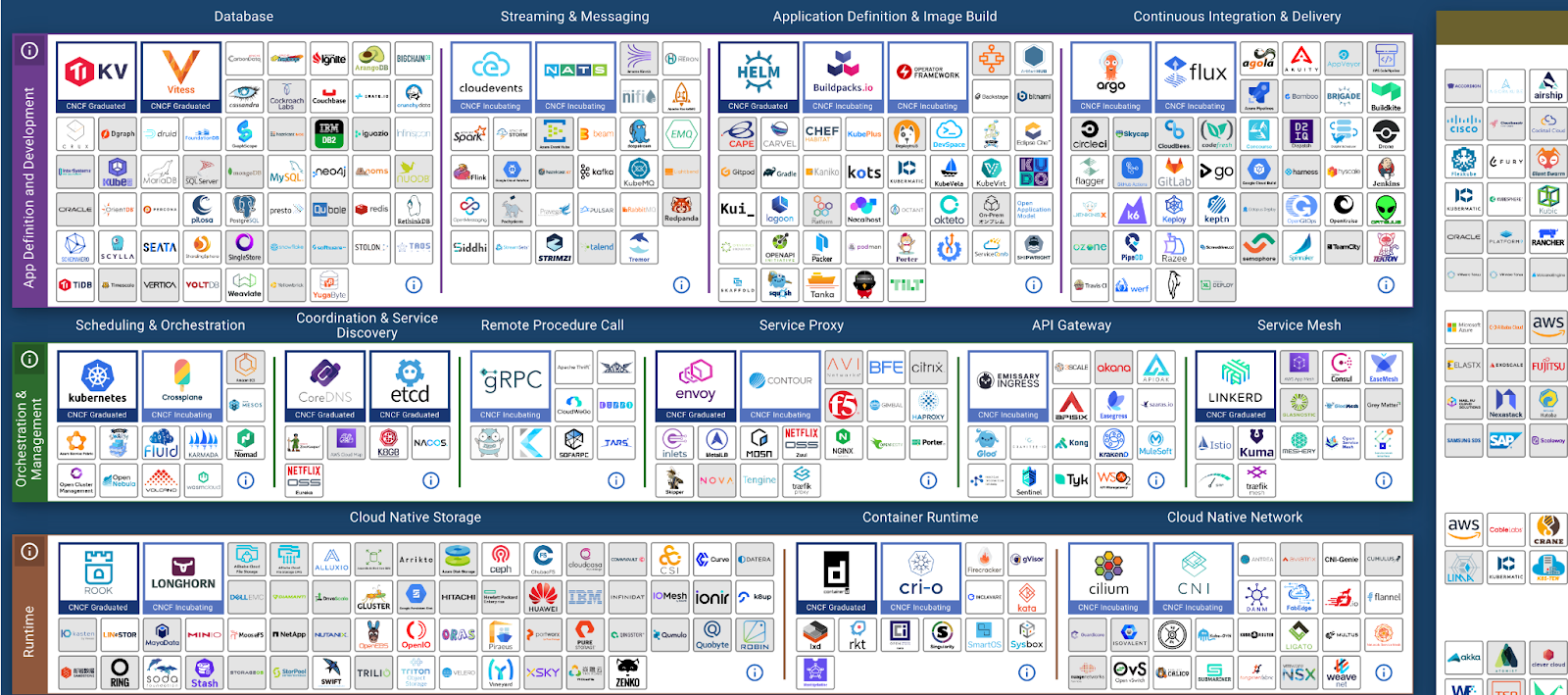

Most of you are familiar with Cloud Native Landscape, it looks something like this and is constantly growing:

To be fair, it's not 100% about Kubernetes - or at least that wasn't the original idea. But in reality, most of what you see is supposed to be used with Kubernetes.

Kubernetes is a Lego set, and if that looks exciting to you - godspeed! I can't help but wonder how a new Kubernetes user is supposed to evaluate all those tools and choose one in each category. Which CI/CD system to choose? Which service mesh? What about the container build toolkit, container definitions, and configuration? And so on.

It's an example of the paradox of choice. Some could take it as a benefit, but in reality, evaluating all the possible solutions is unrealistic, so you have to make a semi-informed decision. And we prefer to make weighted, informed, and data-driven decisions.

Kubernetes is the new POSIX?

The other idea that I keep hearing is that Kubernetes is a new POSIX or a new "Universal Cluster API", meaning you can switch your Kubernetes provider or go on-prem just by switching the Kubernetes endpoint URL.

That is mostly untrue. Even though the API could be the same, everything else could be very different. The way a Kubernetes provider does monitoring, RBAC, auth, auto-scaling, ingress, networking, persistent and object storage, and so on could be vastly different.

Kubernetes is not that complex?

It is. It consists of multiple components that have to be configured and managed separately. And even if you're using a cloud Kubernetes provider, you still need to wrestle with hundreds of APIs and concepts.

I suggest a simple mental experiment to anyone who disagrees:

Try to explain in 30-60 minutes or so how to install Kubernetes with auth, RBAC, persistent storage, and a proper secrets management system* to a person who has never managed it before. Preferably without providing a 20,000-line Ansible playbook with 100+ variables.

* No, default Kubernetes Secrets should not be used to store any sensitive information.
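The footnote rests on a simple fact: values in a Secret's `data` field are only base64-encoded, and unless encryption at rest is configured they are stored unencrypted in etcd. Base64 is an encoding, not encryption. The minimal Python sketch below illustrates that; the password and the helper name `decode_secret_value` are made up for the example and don't come from any real cluster.

```python
import base64

# Hypothetical value, encoded the way it would appear under `data:` in a Secret manifest.
encoded_password = base64.b64encode(b"s3cr3t-db-password").decode("ascii")


def decode_secret_value(value: str) -> str:
    """Reverse the base64 encoding used for values in a Secret's `data` field."""
    return base64.b64decode(value).decode("utf-8")


# Anyone who can read the manifest or the Secret object can do this; no key is needed.
print(encoded_password)
print(decode_secret_value(encoded_password))
```

In other words, anyone with read access to the Secret object gets the plaintext, which is why a dedicated secrets management system is on the list above.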

If you managed to do it - that is really impressive! And what about explaining how it all works with some level of detail?

I'd argue that not only is Kubernetes itself complex, but everything around it is complex as well. Contrary to popular belief, just running a Kubernetes cluster is not even the beginning of the journey. It's merely a prerequisite.

If you're doing Kubernetes management for a living, you probably don't see an issue here. But the fact that there are multiple companies with the single goal of simplifying Kubernetes management is telling, and that is on top of the already simplified solutions from cloud providers.

There are also multiple simplifications of Kubernetes like k3s, minikube, and MicroK8s, indicating the need to simplify Kubernetes deployment and management.

"Despite 6 years of progress, Kubernetes is still incredibly complex," says Drew Bradstock, product lead for Google Kubernetes Engine.

Application deployments are easy, right?

So we have a running Kubernetes instance, now what? You'll quickly realize that plain YAML deployment definitions don't scale. So Helm? Kustomize? Jsonnet? Probably dozens of other toolkits.

It's the paradox of choice all over again. It's great that there are multiple solutions to the problem, it's way better than having none! But, for us, it looks like an indication of a problem. Was YAML a good choice to begin with?

In our experience, a "Just grab this Helm chart" approach usually won't work for your use case, and Helm, even after 3 major releases, still lacks functionality.
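To make the scaling problem with raw YAML definitions concrete, here is a minimal Python sketch of what every templating toolkit ends up doing in some form: taking one base Deployment and stamping out a variant per environment. It is only an illustration under assumptions, not how Helm or Kustomize work internally; the service name, registry URL, environments, and the `render` helper are all hypothetical. The output is JSON, which `kubectl` accepts just like YAML.

```python
import copy
import json

# Hypothetical base manifest; only the standard Deployment fields are used.
BASE_DEPLOYMENT = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "example-service"},
    "spec": {
        "replicas": 1,
        "selector": {"matchLabels": {"app": "example-service"}},
        "template": {
            "metadata": {"labels": {"app": "example-service"}},
            "spec": {
                "containers": [
                    {"name": "app", "image": "registry.example.com/example-service:1.0.0"}
                ]
            },
        },
    },
}

# Hypothetical per-environment differences. Without tooling, each of these
# would be a hand-maintained copy of the whole manifest.
ENVIRONMENTS = {
    "dev": {"replicas": 1, "tag": "1.1.0-rc1"},
    "staging": {"replicas": 2, "tag": "1.0.0"},
    "prod": {"replicas": 5, "tag": "1.0.0"},
}


def render(env_name: str) -> dict:
    """Return a copy of the base manifest with one environment's overrides applied."""
    overrides = ENVIRONMENTS[env_name]
    manifest = copy.deepcopy(BASE_DEPLOYMENT)  # never mutate the shared base
    manifest["metadata"]["name"] = f"example-service-{env_name}"
    manifest["spec"]["replicas"] = overrides["replicas"]
    container = manifest["spec"]["template"]["spec"]["containers"][0]
    repository = container["image"].rsplit(":", 1)[0]  # strip the existing tag
    container["image"] = f"{repository}:{overrides['tag']}"
    return manifest


if __name__ == "__main__":
    for env in ENVIRONMENTS:
        print(json.dumps(render(env), indent=2))
```

Even this toy version has to answer questions about naming, overrides, and merging, and every toolkit answers them differently, which is part of why there are so many of them.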

Also, for a system that can do or be integrated with so many things, Kubernetes is surprisingly narrow in terms of supported runtimes. At the time of writing, it supports only a few, and all of them are just simplified Docker runtimes.

Is Kubernetes that bad?

No, of course not. In fact, Kubernetes is great, so great that people are genuinely surprised when they hear that we don't use Kubernetes. It's so popular we kinda have to write articles like this one just to explain "Why not Kubernetes?".

I also like the idea of the Kubernetes Operator Pattern. It brings even more complexity but unlocks the deployment of things that were not possible before.

What I'm trying to say here is that Kubernetes is not a silver bullet and it should not be a religion. I'd advise against going all in on Kubernetes if you don't have a dedicated team to manage it in the long run, and a Platform team to smooth the day-to-day operations with it.

Behavox use cases

Behavox has multiple use cases for running containers:

- On-demand Development Infrastructure CI runtimes

- ML Training Infrastructure

- Internal self-service runtime platform

- Production environments

We chose Kubernetes for the first two use cases, CI and ML Infra, since there are highly mature solutions already available. They're also limited to one cluster and one account each, and we can use AWS EKS to simplify the management of the cluster.

But the other two are way more complicated. We're going to talk about our internal self-service platform in another article, so let's focus on Production.

Behavox has multiple isolated Production accounts, both cloud and on-prem. For us, running Kubernetes on them would mean running hundreds of isolated Kubernetes clusters.

If we went with that decision, we'd need to hire and train a dedicated Kubernetes team. But even with that team, running multiple Kubernetes clusters in our customers' on-prem environments sounds like a huge gamble. Those environments are less predictable since we have way less control over them.

Conclusions

We have decided that currently, Kubernetes is way too complicated for our Production use case. We wanted something simple, something with a small number of moving parts. Something that would not require high maintenance.

And we think we found it.

Subscribe and stay tuned for the next article in this series to find out what it is and why we chose it!

UPD: Part 2