Microservices Management in Behavox: Part 2 - Nomad

Hey, it's Kirill Proskurin, Head of DevOps Engineering at Behavox.

In the previous post in this series, we wrapped up on the note that, while Kubernetes is great, it's overly complex and we need something simpler.

There are not many other options left on the market since Mesos and Docker Swarm are more or less dead. So what is left?

Hashicorp Nomad

The first Nomad release dates to September 2015, the same year Kubernetes 1.0 dropped, and Nomad has lived in Kubernetes' shadow ever since, even though it's used by many.

While Nomad and Kubernetes both build on ideas from Google's Borg, Nomad was designed in a slightly different way than Kubernetes.

The major differences are:

1. A pluggable driver system, aimed at supporting multiple types of workloads (not only Docker or containers in general)

2. Simplicity

Let's briefly talk about those differences.

Multiple types of workloads

You can run many different things in Nomad: Docker containers, obviously, but also Podman, Java applications or any other binary, QEMU VMs, Firecracker, LXC, and many more.

You may not need any of this, but it's a good design, and one of Hashicorp's goals is to meet their clients where they are. They are not saying "Hey, just migrate all your code to containers and then we can support it". You can start with what you already have and get Nomad's benefits on top of it.
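To illustrate the driver model, here is a minimal sketch of one group that runs a plain binary with the exec driver and a jar with the java driver, with no container image involved. The binary path, artifact URL, and JVM flags are hypothetical. The sketch assumes both drivers are enabled on the client, and the exec driver is Linux-only.

```hcl
group "legacy" {
  # A plain binary, isolated by the exec driver (chroot + cgroups on Linux)
  task "worker" {
    driver = "exec"

    config {
      command = "/usr/local/bin/worker" # hypothetical binary on the client
      args    = ["--verbose"]
    }
  }

  # A Java application run directly on the client's JVM
  task "api" {
    driver = "java"

    # Downloaded into the task's local/ directory by default
    artifact {
      source = "https://artifacts.example.com/api.jar" # hypothetical URL
    }

    config {
      jar_path    = "local/api.jar"
      jvm_options = ["-Xmx512m"]
    }
  }
}
```

Switching a task from one driver to another changes only its driver and config blocks. Scheduling, artifacts, and templates keep working the same way.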

Simplicity: moving parts

Managing Nomad as a service is significantly easier than managing Kubernetes, thanks to some smart design choices and an overall goal of being as simple as realistically possible.

Nomad is advertised as a single-binary deployment: to run Nomad, all you need to do is download, configure, and start a single binary. That's it. For comparison, with Kubernetes you'd need to manage at least six components:

- kube-apiserver

- kube-scheduler

- kube-controller-manager

- kubelet

- kube-proxy

- etcd cluster

Some would say that this is fine, and probably a good design that lets the solution scale. And there are single-binary Kubernetes distributions as well.

All true, but we'd still prefer a solution that was designed to be simple from the get-go, especially in our use case of hundreds of isolated environments.
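To make the single-binary point concrete, here is a minimal sketch of an agent configuration in which the same nomad binary acts as both server and client on one node. The paths are assumptions. The file would be started with nomad agent -config=/etc/nomad.d/nomad.hcl. This layout suits a lab or demo. Production clusters usually run three or five dedicated servers and separate client nodes.

```hcl
# /etc/nomad.d/nomad.hcl (hypothetical path): one binary, both roles
datacenter = "dc1"
data_dir   = "/opt/nomad/data"
bind_addr  = "0.0.0.0"

# Scheduler and state store; a single-server cluster bootstraps itself
server {
  enabled          = true
  bootstrap_expect = 1
}

# Runs the workloads placed by the scheduler
client {
  enabled = true
}
```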

As for scale, Nomad seems to have no real issues there; see the Two Million Container Challenge: https://www.hashicorp.com/c2m

But wait, you might argue, Hashicorp is oversimplifying things here: Nomad without Consul and Vault is relatively useless, so it's not really a single binary!

I agree, and it looks like this has been said so many times that Hashicorp stepped in and addressed it: Nomad 1.3 added native service discovery, and Nomad 1.4 added Nomad Variables for secrets and KV storage. So, hopefully, that argument is closed for now. In reality, though, you would still need to deploy Consul and Vault to use Nomad in a realistic production context.
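For smaller setups, the native features can be enough. Below is a minimal sketch of native service discovery, assuming Nomad 1.3 or later. Setting provider = "nomad" registers the service in Nomad's own catalog instead of Consul. A task in another job can then render the addresses with the nomadService template function. The service name and output file are illustrative.

```hcl
group "cache" {
  network {
    port "db" {
      to = 6379
    }
  }

  # Register in Nomad's built-in service catalog instead of Consul
  service {
    name     = "redis"
    port     = "db"
    provider = "nomad"
  }

  task "redis" {
    driver = "docker"

    config {
      image = "redis:6.0"
      ports = ["db"]
    }
  }
}

# In a task of a consuming job: one line per registered instance
template {
  data = <<EOF
{{ range nomadService "redis" }}
REDIS_ADDR={{ .Address }}:{{ .Port }}
{{ end }}
EOF

  destination = "local/redis.env"
  env         = true
}
```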

It is worth pointing out that integration between Nomad, Consul, and Vault is seamless, quick, and super easy.

More on that later.
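As a taste of that integration, here is a hedged sketch of a group that registers its service in Consul, the default provider, with an HTTP health check. It also asks Nomad to obtain a Vault token for the task. It uses the token-based vault block with policies from the Nomad releases of the period described here. Later releases move to workload identities, so check the docs for your version. The image, health endpoint, and policy name are hypothetical.

```hcl
group "app" {
  network {
    port "http" {
      to = 8080
    }
  }

  # Registered in Consul and health-checked by Consul
  service {
    name = "app"
    port = "http"

    check {
      type     = "http"
      path     = "/health" # hypothetical health endpoint
      interval = "10s"
      timeout  = "2s"
    }
  }

  task "app" {
    driver = "docker"

    config {
      image = "example/app:1.0" # hypothetical image
      ports = ["http"]
    }

    # Nomad fetches and renews a Vault token with these policies for the task
    vault {
      policies = ["app-read-secrets"] # hypothetical Vault policy
    }
  }
}
```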

Simplicity: features

If you compare the feature sets of Kubernetes and Nomad, it's undeniably clear that Kubernetes can do much more (with containers).

If you need some of those features, you need Kubernetes.

But what if all you need is:

- Be able to run workloads

- Be able to discover and load-balance the running services

- Be able to keep sensitive information in a secure storage

- And do all that in a simple and manageable manner

I'd argue that this is what ~80% of Kubernetes users really need, and that is exactly what Nomad does easily. With the addition of Consul and Vault, it does it significantly better than "naked" Kubernetes.

You can still do advanced things with Nomad, like a Service Mesh via Consul Connect, L3 networking via CNI, and persistent storage via host volumes or CSI.
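For example, persistent storage with a host volume takes two pieces. The client agent exposes a host directory under a name. The job claims that name at group level and mounts it into the task. Below is a minimal sketch, assuming a hypothetical /opt/redis-data directory on the client. It also uses bridge networking, which relies on the CNI plugins being installed on the client.

```hcl
# --- Client agent configuration ---
client {
  enabled = true

  host_volume "redis-data" {
    path      = "/opt/redis-data" # hypothetical directory on the client
    read_only = false
  }
}

# --- Job specification ---
group "cache" {
  network {
    mode = "bridge" # CNI-backed network namespace per allocation

    port "db" {
      to = 6379
    }
  }

  # Claim the host volume by the name the client exposes
  volume "data" {
    type      = "host"
    source    = "redis-data"
    read_only = false
  }

  task "redis" {
    driver = "docker"

    # Mount the claimed volume into the container
    volume_mount {
      volume      = "data"
      destination = "/data"
    }

    config {
      image = "redis:6.0"
      ports = ["db"]
    }
  }
}
```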

Also, fewer features mean a less bloated and less complicated codebase, which usually means fewer bugs. We're very happy with Nomad's stability so far.

Simplicity: DSL

Nomad, like all other Hashicorp products, uses HCL2 for configuration.

Here is what a simple Nomad job looks like in HCL:

```hcl
job "redis" {
  datacenters = ["dc1"]

  group "cache" {
    network {
      port "db" {
        to = 6379
      }
    }

    task "redis" {
      driver = "docker"

      config {
        image = "redis:6.0"
        ports = ["db"]
      }

      resources {
        cpu    = 500 # MHz
        memory = 256 # MB
      }
    }
  }
}
```

HCL2 is significantly better than YAML and, in the majority of cases, will not require an additional templating engine on top of it, unlike Kubernetes, where you almost certainly need one.

You can natively do things like:

- Local and global variables

- Templating and formatting

- Type validation

- Multiline "heredoc" strings

- Close to a hundred filters, expressions, transformations, etc.

- And many more
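Here is a hedged sketch that combines several of these features in one job file: typed input variables with a custom validation rule, a locals block, built-in functions (format, join, length), and a heredoc with HCL interpolation. The variable names and rendered file are illustrative, not taken from our setup.

```hcl
variable "redis_version" {
  type        = string
  default     = "6.0"
  description = "Redis image tag to deploy"

  # Custom validation rule, checked when the job file is parsed
  validation {
    condition     = length(var.redis_version) > 0
    error_message = "The redis_version variable must not be empty."
  }
}

variable "datacenters" {
  type    = list(string)
  default = ["dc1"]
}

locals {
  # Build the image reference once with a built-in function
  image = format("redis:%s", var.redis_version)
}

job "redis" {
  datacenters = var.datacenters

  group "cache" {
    task "redis" {
      driver = "docker"

      config {
        image = local.image
      }

      # Multiline heredoc; ${...} is resolved by HCL at parse time
      template {
        destination = "local/motd.txt"
        data        = <<EOF
Redis ${var.redis_version}
Datacenters: ${join(", ", var.datacenters)}
EOF
      }
    }
  }
}
```

Values can then be overridden at submit time, for example with nomad job run -var="redis_version=7.0" redis.nomad.hcl, without any external templating step.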

I'd argue that virtually no one uses Kubernetes without some abstraction on top of the YAML files, so was YAML a good choice in the first place?

In the end, HCL is transformed into JSON, which is great if you want to add extra validation or tooling without worrying about HCL syntax.

Simplicity: Service Mesh

If you add Consul to the mix, you get better service discovery, KV storage, and, optionally, a fully functional Service Mesh implementation in Nomad via Consul Connect.

Consul Connect uses Consul as the control plane for the Envoy proxy, and all the hard work of configuring and even launching Envoy is done for you.

All you need to do is tell Nomad that a service needs Service Mesh connectivity by adding this to its service block:

```hcl
connect {
  sidecar_service {}
}
```

Then tell the other service which upstream it should connect to:

```hcl
connect {
  sidecar_service {
    proxy {
      upstreams {
        destination_name = "<YOUR_CONSUL_SERVICE_NAME>"
        # Required: local port on which the sidecar exposes this upstream (example value)
        local_bind_port  = 8080
      }
    }
  }
}
```

That is more or less it. You now have an Envoy sidecar running inside your Nomad group (the equivalent of a Pod in Kubernetes). Your application can reach the upstream service through it on the local bind port, with mTLS authentication and traffic encryption for free.

To be fair, it becomes slightly more complicated when you need multiple upstreams, an Ingress Gateway, or a Terminating Gateway.

Features: Artifacts

Nomad lets you do a few great tricks; let's start with artifacts.

In a Nomad job's HCL2 file, you can define an artifact block that will download... an artifact when the task starts.

For example:

```hcl
artifact {
  source      = "https://example.com/file.tar.gz"
  destination = "local/some-directory"

  options {
    checksum = "md5:df6a4178aec9fbdc1d6d7e3634d1bc33"
  }
}
```

This feature is super useful in many cases, and it helped us tremendously at the beginning of our migration from non-container deployments to containerized ones.

If you're not starting from scratch, you will already have a lot of software in the form of packages or binaries. Asking all teams to bake their software into containers could be unrealistic in the short term.

Since Behavox uses mostly Java for in-house applications, we were able to use a single Java Docker image for all applications and put the application itself into it via artifacts.

That was a straightforward and clear solution, and updating the Java version for security fixes was a breeze, since we only needed to update one image.
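Here is a minimal sketch of that pattern: one shared Java image, with the application jar fetched as an artifact at task start. The artifact URL, jar name, and base image are assumptions for illustration, not the images we actually use. Nomad mounts the task's local/ directory at /local inside a Docker container, which is why the jar path in args starts with /local.

```hcl
task "my-app" {
  driver = "docker"

  # Download the application jar into the task's local/ directory
  artifact {
    source      = "https://artifacts.example.com/my-app/1.2.3/my-app.jar" # hypothetical URL
    destination = "local/"
  }

  config {
    # One shared Java base image for every application (assumed image)
    image   = "eclipse-temurin:17-jre"
    command = "java"
    # local/ is visible as /local inside the container
    args    = ["-jar", "/local/my-app.jar"]
  }
}
```

Rolling out a new application version changes only the artifact URL, while a JVM security update changes only the image tag.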

As far as I know, there is no native way of doing something like that in Kubernetes, unless you want to store the file base64-encoded in a ConfigMap?

Features: Templates

Another great feature of Nomad is templates.

You can create a file inside your container by defining something like this:

```hcl
# Assumes a locals block defining bind_port elsewhere in the job file
template {
  data = <<EOH
---
bind_port: ${local.bind_port}
scratch_dir: {{ env "NOMAD_TASK_DIR" }}
node_id: {{ env "node.unique.id" }}
service_key: {{ key "service/my-key" }}
EOH

  destination = "local/file.yml"
}
```

In the example above, I'm mixing a Nomad local variable, environment variables, and a Consul KV key. Yes, it's that simple.

What about secrets? You can use Nomad's native secrets or production-grade Vault ones:

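```hcl
# Requires a vault block in the task that grants access to this path
template {
  data = <<EOF
AWS_ACCESS_KEY_ID = "{{ with secret "secret/data/aws/s3" }}{{ .Data.data.aws_access_key_id }}{{ end }}"
EOF

  # A destination is required even when the file is loaded as environment variables
  destination = "secrets/file.env"
  env         = true
}
```

Remote template? Easy: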

```hcl
artifact {
  source      = "https://example.com/file.yml.tpl"
  destination = "local/file.yml.tpl"
  # Treat destination as a file path, not a directory to download into
  mode        = "file"
}

template {
  source      = "local/file.yml.tpl"
  destination = "local/file.yml"
}
```

I'd be surprised if you still preferred ConfigMaps after using Nomad templates!

And I'd advise never using Kubernetes native Secrets for... secrets.
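For the native option, here is a hedged sketch using Nomad Variables (Nomad 1.4+). A job's tasks can read variables stored under nomad/jobs/ followed by the job ID. The job ID my-app and the key db_password are hypothetical. Such a variable could be created beforehand with nomad var put nomad/jobs/my-app db_password=....

```hcl
# Task in a job whose ID is "my-app" (hypothetical)
template {
  data = <<EOF
{{ with nomadVar "nomad/jobs/my-app" }}
DB_PASSWORD={{ .db_password }}
{{ end }}
EOF

  # secrets/ is the task directory meant for sensitive files
  destination = "secrets/app.env"
  env         = true
}
```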

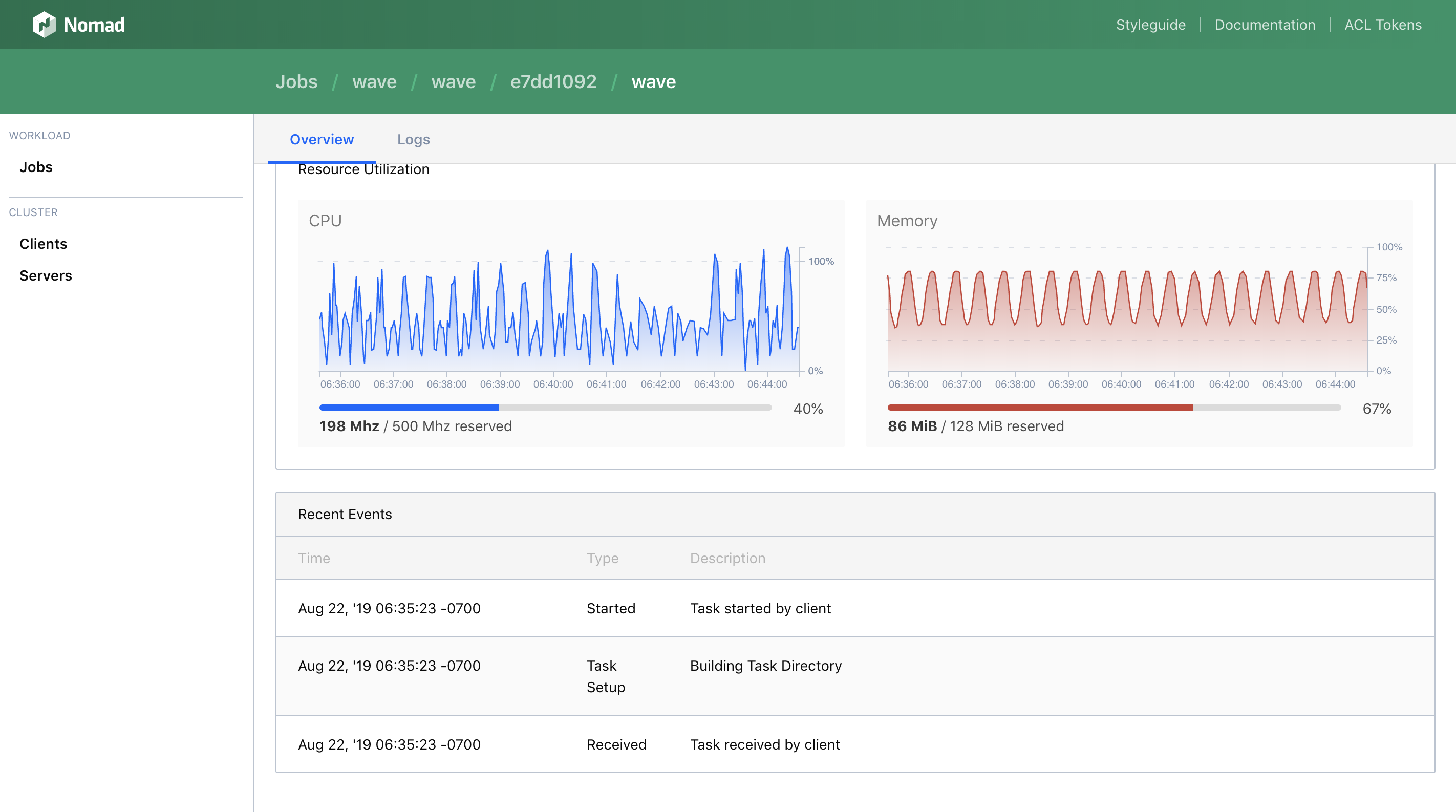

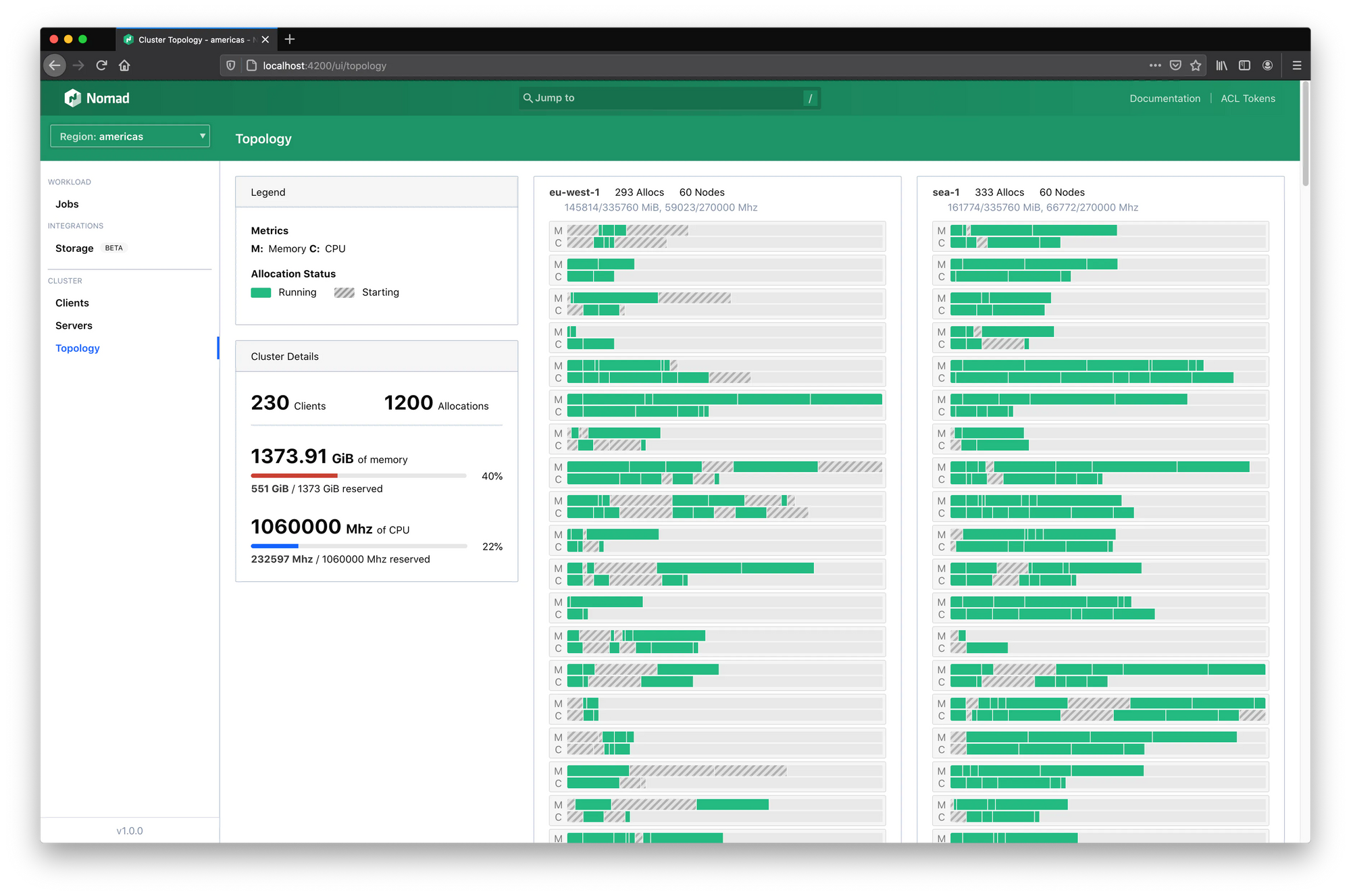

Features: GUI

Hashicorp puts a surprising amount of work into building the Nomad GUI.

New features are added in each release, and it doesn't feel like an afterthought, as it does in Kubernetes.

Not a big deal for many, but in my view it's all part of the focus on simplicity and ease of use.

Documentation

It's a very subjective topic, but in my view Hashicorp has some of the best documentation out there for all of their products. It looks good, it's well structured, and it's informative.

My only complaint is that some use cases aren't covered, and you'll have to search for a working solution through GitHub issues and blog posts; CNI is a good example here.

Some criticism

It's fair to say that we're very happy with Nomad, but it's not all ponies and rainbows.

I already mentioned that the documentation lacks some very specific use cases. And since Nomad adoption is quite small compared to Kubernetes, you may run into situations where you can't easily find a solution to your problem.

Nomad doesn't have advanced orchestration functionality like Kubernetes Operators, or even StatefulSets, so I'd argue that Nomad could be a bad choice for workloads with a complicated bootstrap or lifecycle.

Nomad recently introduced Nomad Pack, which is Nomad's take on Helm. I'm not a fan of either, but I can see how the lack of something like this could be disappointing for people coming from Kubernetes.

Hashicorp products follow a freemium model: all of their products have an OSS version and an enterprise version with extra bells and whistles. For Nomad, this means you can't use features like quotas and Sentinel without paying.

And Hashicorp's pricing model is "call us", so it depends.

Nomad's integration with Consul and Vault is great, but some features are just not supported (yet), like configuring Consul Intentions from Nomad.

Finally, Nomad does have some irritating bugs that have stayed unresolved for quite some time. We were mostly concerned about the iptables reconciliation bug, which was finally fixed in 1.5.x.

Conclusion

Back in Q4 2021, we finally accepted that we would need to migrate to container-based orchestration and that we would need a system to manage it.

My previous experience with Kubernetes told me that, given Behavox's specifics, jumping on the Kubernetes hype train would be a bad idea. Managing up to a hundred isolated Kubernetes clusters is no joke and would require additional hiring and maybe a dedicated team.

I wanted a solution that the current team could onboard and that would require close to zero effort to run and manage, and Nomad was a perfect fit.

We already used Consul and Vault, so it was easier for us to add Nomad and use it to its full capacity.

We have been running dozens of Nomad clusters for a year and have had only one major issue, during the upgrade to 1.4.x. It could have been prevented if we'd read the upgrade notes a bit more carefully.

Stay tuned for a more in-depth article on how Behavox deploys, manages, and configures Nomad and its workloads!